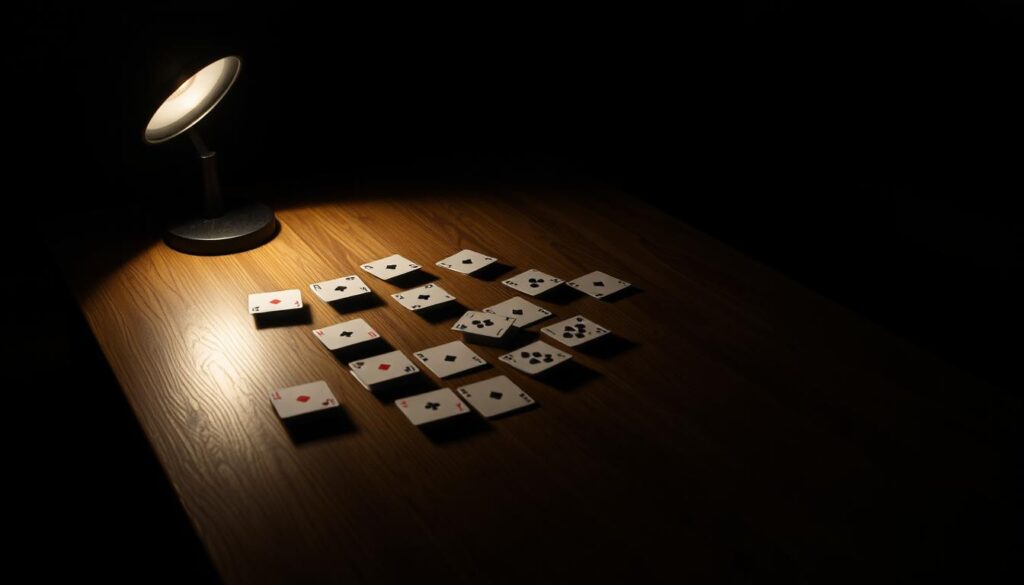

Do you really pick, or are you guided toward someone else’s win?

You face options every day, but many menus are engineered to steer you.

The tactic hides behind apparent freedom. Companies and platforms present near-identical options so you argue over labels while they keep control. This is dark psychology at work: keep the choices open, nudge you down one path, and let you justify the pick afterward.

Here are quick signs and moves to watch for:

- Tactics: defaults, visual salience, timing nudges.

- Examples: checkout displays, algorithm feeds, bundled pricing.

- Warning signs: near-identical options, limited time pressure, hidden alternatives.

- Defenses: ask who benefits, seek off-menu alternatives, pause before deciding.

Recognizing this frame reclaims your agency. Throughout this guide you’ll learn how the script works across the consumer world and politics, and how to break engineered funnels.

Want the deeper playbook? Get The Manipulator’s Bible – the official guide to dark psychology. https://themanipulatorsbible.com/

Key Takeaways

- What seems like free choice can be a curated menu designed to keep others in control.

- Small design cues—defaults, layout, timing—nudge your decisions without overt force.

- Brands and groups use similar options to steer outcomes while keeping you engaged.

- Spot the signs: identical variants, urgency, and hidden off-menu options.

- Defend your time and attention by questioning who gains from your selection.

What Illusion of Choice Manipulation Really Means in Dark Psychology

Many systems let you pick while quietly steering the final result. That setup promises control but often hands you curated menus that channel your attention. You still act, yet the path was shaped upstream.

Definition and power: The core frame lets you feel agency while an architect preselects outcomes. This lowers resistance because you say “I chose it,” not “I was pushed.”

- Mechanism: defaults, layout, sequencing, and curated options narrow what you see and steer your decision.

- Psychology: the system exploits preexisting beliefs and preferences, so you defend the pick afterward.

- Media effect: algorithmic echo chambers amplify what people already think, raising the perceived consensus and boosting influence.

- Everyday example: tiered pricing that makes one plan feel “smart” while outcomes remain similar.

Warning signs: near-identical variants, urgency cues, and visible popularity signals from your group.

Defenses: widen the frame before you choose—ask who benefits, what need this solves, and whether the best option sits off-menu.

Where It Thrives: Platforms, Environments, and Groups That Influence You

Your daily feeds and store shelves quietly shape which path you take. Algorithms, shelf layouts, and social signals work together to convert attention into action.

Social platforms are engineered environments: algorithms favor alignment and keep people scrolling. Echo chambers filter dissent and harden your preferences.

Social media architectures

Echo chambers amplify what your group already believes. Influencer voices then simulate popularity, turning attention into buys or votes.

- Examples: curated feeds, trending tags, high-follower endorsements.

- Warning signs: identical viewpoints, recycled sources, and pressure to repost.

Marketplace “variety” and FOMO

Abundance hides sameness. Rows of near-identical products create an illusion of choice where options multiply but real value does not.

- Example: streaming overload—dozens of services that steal your time while you compare rather than watch.

- Checkout traps: eye-level placement, impulse racks, and low-stock badges that push fast decisions.

Warning signs: too many near-identical options, social proof without sources, and urgent cues that rush your decision.

Counter-move: precommit to what you actually need, cap the platforms you use, and apply a 24-hour rule before upgrading or subscribing.

What Science Says About Choice, Control, and Belief

Data from large studies shows that choice acts like a channel for preexisting beliefs.

Key experiment: across more than 10,000 participants, Yale and Wharton researchers tested simple lotteries and box selections.

Finding: giving people a choice did not raise their confidence or perceived odds unless they already held a belief tied to a number or box.

Choice ≠ control. Choice lets you act on a story you already carry. It does not create mastery where none exists.

Research insight

- Mechanism: beliefs provide the engine; choice provides the steering wheel.

- Effect: options only feel meaningful when prior beliefs assign them value.

- Social angle: in a group setting, shared myths make those beliefs louder and choices feel safer.

| Scenario | Perceived Control | Recommended Move |
|---|---|---|
| Random assignment | Low but honest | Prefer assignment when outcomes are random |
| Free choice with no data | Illusory control | Delay your decision; seek evidence |
| Choice backed by belief | High perceived control | Check whether belief matches facts |

Actionable takeaways

- Pause: before you select, ask whether your decision tracks data or a familiar story.

- Test: if the outcomes are equivalent, accept assignment so you do not grow attached to a pick that made no difference.

- Watch your group cues: shared endorsements can morph belief into buying power.

Illusion of Choice Manipulation: Tactics, Examples, and How to Regain Control

What looks like a fair lineup can be engineered to steer your next move. Below are clear tactics you will face, real-world examples, signs that choices are staged, and a compact defense playbook you can use today.

Common manipulative tactics you’ll face

- False dilemmas: reduce complex issues to two options to corner your decision.

- Scarcity scripts: “Limited offer” banners and countdowns that rush your decision.

- Card-on-file trials: easy signup, hard cancellation—friction keeps people enrolled.

- Perpetual upgrades: endless updates that lock you into a product world.

- Shelf & checkout design: placement and pacing push impulse choices in-store or online.

Everyday examples that steer your preferences

- Streaming bundles: too many options create fatigue so you keep subscribing instead of watching.

- Supermarket aisles: dozens of similar cereals give a false sense of variety.

- Influencer endorsements: loud voices simulate group consensus and shape preferences.

- Political frames: narrowed menus define what counts as acceptable inside your group identity.

Warning signs your choices are engineered

- Artificial urgency that overrides comparison.

- All listed options reward the presenter, not you.

- Over-personalized feeds hide true alternatives and amplify your group bubble.

- Post-purchase regret and a persistent “there’s better” itch.

Defense playbook to reclaim decision-making power

- Reframe the set: add “do nothing” and seek third-way alternatives.

- Slow the decision: use a 24-hour rule for non-essentials to align time with value.

- Audit beliefs: separate stories from data; verify claims across independent sources.

- Control your environment: unsubscribe, unfollow, and change shopping routes to cut impulse triggers.

- Commitment rules: fixed upgrade cycles, budget thresholds, and cancel dates stop spur-of-the-moment buys.
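The slow-down and commitment rules above can be written down as an explicit check you run before buying. The sketch below is only an illustration: the function name `may_buy`, the constant `COOLING_OFF`, and the `budget_cap` parameter are hypothetical, and the 24-hour wait is the only value taken from this guide.

```python
from datetime import datetime, timedelta

# The 24-hour rule for non-essentials; adjust to taste (assumption: one fixed wait).
COOLING_OFF = timedelta(hours=24)


def may_buy(first_seen: datetime, now: datetime, price: float, budget_cap: float) -> bool:
    """Return True only if the cooling-off period has passed and the price fits the cap."""
    waited_long_enough = (now - first_seen) >= COOLING_OFF
    within_budget = price <= budget_cap
    return waited_long_enough and within_budget
```

Setting the wait and the cap before you see an offer is the point: the countdown timer no longer sets the clock, you do.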

Conclusion

Big takeaway: The menu is power. When others set the menu and the clock, your choice can serve their plan, not yours.

Recognize the pattern: abundance without difference, urgency without cause, and curated group consensus without scrutiny are red flags.

Defend your agency: expand your choices (add “do nothing”), slow down decisions, and verify claims across independent sources in a noisy world.

Lead your group: set norms that favor facts over hype and protect the people close to you from engineered funnels.

Own the frame: real freedom is choosing the game, not just the option. Learn to spot the table-setters and reclaim the rules.

For a deeper playbook, read this guide on deconstructing the menu or get The Manipulator’s Bible — the official guide to dark psychology: https://themanipulatorsbible.com/.

FAQ

What does “the illusion of choice” mean in dark psychology?

It refers to situations where you believe you have multiple options, but those options are curated to steer your decision. You retain a sense of control, yet the range and framing of the choices nudge you toward a predetermined outcome.

How do curated options shape your decisions without removing choice?

Curators, platforms, or influencers present a limited set of attractive alternatives. By controlling presentation, order, and context, they trigger cognitive shortcuts like anchoring and social proof so you pick what was intended while feeling autonomous.

What psychological factors make you vulnerable to this tactic?

Preexisting beliefs, cognitive biases, and a desire for efficiency make you rely on heuristics. Low confidence and time pressure increase reliance on presented cues, while confirmation bias pushes you toward options that match your worldview.

Where do you most commonly encounter engineered choices?

You’ll see them in social media feeds, on e-commerce sites, in news feeds, and in curated subscription services. Environments that personalize content or highlight bestsellers concentrate the options you see and amplify certain outcomes.

How do social media architectures create echo chambers and influence you?

Platforms use engagement signals and network data to show content aligned with your preferences. That reinforces beliefs and limits exposure to dissenting views, making it easier for influencers and advertisers to guide your decisions.

Why can more options increase pressure and reduce autonomy in marketplaces?

When vendors present many similar choices, you experience decision fatigue and fear of missing out. That anxiety makes you rely on cues like scarcity, ratings, or popularity—tools sellers use to channel you to a target product.

What does research say about choice and perceived control?

Studies show choice alone doesn’t create real control; your beliefs determine how empowered you feel. Options enable you to act on those beliefs, but manipulation of options changes which beliefs you can easily act on.

What common manipulative tactics should you watch for?

Look for limited-time offers, anchored pricing, framed comparisons, curated recommendation lists, scarcity cues, and selective testimonials. These techniques shape perception and nudge behavior while preserving a surface sense of freedom.

Can you give everyday examples that look like freedom but steer your preferences?

Examples include streaming services offering “recommended” bundles, online retailers highlighting “frequently bought together” items, political messaging using curated polls, and app stores pushing featured apps—each narrows the realistic set of choices you consider.

What warning signs indicate your choices are being engineered?

Warning signs include repetitive exposure to similar options, sudden popularity spikes without clear reason, aggressive scarcity messaging, and recommendations that align perfectly with your recent activity. If you feel rushed or the range seems oddly narrow, be skeptical.

How can you reclaim decision-making power against these tactics?

Slow down and widen your information sources. Actively seek contrary viewpoints, compare uncurated alternatives, set decision rules (like cooling-off periods), and use privacy tools that limit tracking. Those steps restore genuine agency.

How do personal beliefs influence whether a choice gives you control?

Your beliefs frame what options feel meaningful. When your values are clear, choices enable purposeful action. If curated options conflict with your values, the appearance of control is hollow—recognizing the misalignment lets you opt out.

Are some groups or platforms more likely to engineer choices than others?

Commercial platforms, political networks, and tight-knit online communities tend to be the most active. They rely on engagement and conversion metrics, so they continuously refine how options are presented to maximize predictable outcomes.

What tools or strategies can organizations use ethically instead of engineering choices?

Ethical approaches include transparent algorithms, clear labeling of sponsored content, providing comprehensive option sets, and allowing easy opt-out. These practices preserve your agency while helping you make informed decisions.